When to Automate Testing?

Note: The following essay applies to software developed for an upgrades-based business model. While it may apply to other software business models, I make no attempt to defend that assertion. Also, see my disclaimer if you think this is more than just my personal opinion of the world.

The Problem

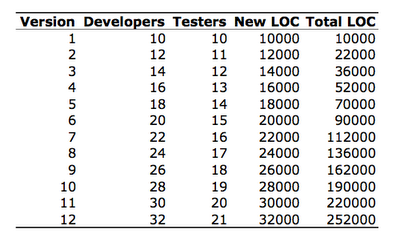

In the beginning, you're a small team, maybe 10 developers and 10 testers. (Okay, this is a huge team, but I'm trying to make the numbers easy; work with me!) You ship your version 1.0 and it's a huge success and you make loads of money! You also get lots of feedback on what could be better. So, you go to work on version 2.0. Part of what you need to make version 2.0 great is more developers. You hire one or two more developers and one or two more testers, but there is a problem: the testers must test everything in version 1.0 plus all the new stuff scheduled for 2.0. You've hired exceptional testers, and they dig in; with long hours they manage to test sufficiently, barely, and you ship 2.0. Everyone attends the big ship party! Whoo! After a while, you look at your finances and realize that while 2.0 was much better than 1.0, a lot could still be better, and your customers make that very clear. So the market for your product is not saturated, which means there's still lots of upside. On top of that, your sales team informs you that just by adding feature X along with feature Y you can at least double your potential market! Enthused by the success of your product, you move on to version 3.0, and it's about at this point that you begin to sense some nervousness from your test team. Testers always seem to be a hypercritical bunch (it is their job, you know), so you brush off that antsy feeling, excited by your increasingly successful product. By about version 12.0 you realize what the testers were so nervous about:

Note: The numbers are fake, but the problem is real.

Let's say 1 developer produces 1,000 lines of code each product cycle. Can you see a pattern here?

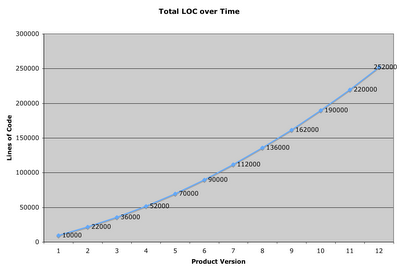

The graph of code growth looks like this:

The number of testers you have working on the product must either increase with your code base or you're doomed, eventually, to shipping a product of lower quality. Also, in case you're wondering, increasing your testers in proportion to your code base has some pretty negative financial implications, as does pushing out your ship date to make room for more testing.
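The Python sketch below puts the arithmetic into a small model. It is a toy, not data. It assumes a starting team of 10 developers and 2 developers hired per release, both hypothetical. It uses the essay's figure of 1,000 lines per developer per cycle. It also assumes a fixed ratio of one tester per 1,000 lines, which is what 10 testers for a 10,000-line 1.0 implies.

```python
# Toy model of code-base growth (all numbers hypothetical except the
# essay's 1,000 lines per developer per cycle).
LINES_PER_DEV_PER_CYCLE = 1_000
STARTING_DEVS = 10
DEVS_ADDED_PER_RELEASE = 2   # assumption: "one or two more" each release
LINES_PER_TESTER = 1_000     # assumption: 10 testers covered 10,000 lines in 1.0


def growth_table(releases: int) -> list[tuple[int, int, int, int]]:
    """Return (version, developers, total_lines, testers_needed) per release."""
    rows = []
    total_lines = 0
    for version in range(1, releases + 1):
        devs = STARTING_DEVS + DEVS_ADDED_PER_RELEASE * (version - 1)
        # Old code never goes away: every release adds to what must be tested.
        total_lines += devs * LINES_PER_DEV_PER_CYCLE
        testers_needed = -(-total_lines // LINES_PER_TESTER)  # ceiling division
        rows.append((version, devs, total_lines, testers_needed))
    return rows


if __name__ == "__main__":
    for version, devs, lines, testers in growth_table(12):
        print(f"{version:>2}.0  devs={devs:>2}  lines={lines:>7,}  testers needed={testers:>3}")
```

By 12.0 the team in this model has grown to 32 developers. The code base, however, has reached 252,000 lines, so keeping the 1.0 tester-to-code ratio would take 252 testers.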

If that were not enough, there's some pretty solid evidence that even the original 10 testers were insufficient for the initial 1.0 code base, let alone for the additional load that has piled up over time! Exhaustive, comprehensive testing is simply impossible. So while in version 1.0 the testers had to make intelligent priority judgments about what to test, in version 12.0 they basically have to divine the future if they have any hope of finding the critical bugs manually!

It really is this hard.

The professional testers I know face an enormous challenge and must be so decisive about where they spend their time that it amazes me. Sometimes new testers, or those unfamiliar with software development, will simply think, "Hey, all I have to do is find all the bugs!" and they'd be wrong. What they have to do is find every important bug and verify that every critical code path is working. (And loads of other stuff, but that's for another essay...) You see, at the core of professional software testing there is a built-in, super-advanced, internal priority system. Great testers seem to have an efficacy gene that lets them explore the areas that matter most and identify the worst bugs. This is a skill and an art, and the world could use more great software testers.

The Automation Pill

Given all the foregoing, it is absolutely incredible to me that once testers are given the chance to write code to automate some of their work, somehow, for some reason, this priority system goes into stealth mode. I don't know why this is. Perhaps the part of the brain you use to write code interferes with the part that weighs the relative costs of automation. Perhaps it's the dream: "If only I could automate all the 1.0 feature testing, then in 2.0 I could focus on just the new, fun stuff." It could be, and sometimes is, a manager who has long since lost touch with what core testing is all about and who, looking for ways to "drive efficiency and reduce costs," asks for something as silly as 100% automation. It could be a million things, but this much is certain: test automation is a fantastic tool that I believe can help with the problem described above, but like most tools, when it's misused, it hurts.

When to Automate?

So when do you automate your tests? I don't know, for sure, but I do have some questions you might consider when making the decision. All of these questions have a common theme, and it is this: What is my return on investment for automating this test?

Script Death

When you invest time to write an automated test, you implicitly lose time you could have spent finding and filing bugs. That lost time pays off only if your test stays valid for a certain number of test runs. The best way I've heard this described is "script death."
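The payback reasoning can be written as a break-even count. This is a minimal sketch under a simple assumption. Writing the script costs a fixed amount of time up front. Each run then saves the difference between running the test by hand and tending the automated run. The function name and parameters are illustrative, not from any tool.

```python
import math
from typing import Optional


def break_even_runs(
    minutes_to_automate: float,
    manual_minutes_per_run: float,
    automated_minutes_per_run: float = 0.0,
) -> Optional[int]:
    """Runs needed before automation pays back the time spent writing it.

    automated_minutes_per_run is the human time still spent on each automated
    run (kicking it off, reading results). Returns None if automating never
    saves time per run.
    """
    savings_per_run = manual_minutes_per_run - automated_minutes_per_run
    if savings_per_run <= 0:
        return None
    return math.ceil(minutes_to_automate / savings_per_run)
```

Take a script that takes 8 hours (480 minutes) to write, replaces a 20-minute manual pass, and needs 2 minutes of attention per run. It breaks even after `break_even_runs(480, 20, 2) == 27` runs. If it dies before then, it cost more than it saved.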

When you write the script for the first time, you give it life. It lives as long as you don't need to modify it in any way and its results continue to be valid. If the script has a bug in it, or the product under test changes, or a new OS version changes an assumption, or a new CPU comes to town and invalidates your test, your script has died, and you need to re-examine whether you are going to invest the time to fix it.
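When a script dies, the time already spent writing it is gone either way. What matters is whether fixing it will pay for itself over the runs still ahead. Here is a hedged sketch of that decision. It assumes you can estimate the fix cost and the number of remaining runs, both hypothetical inputs.

```python
def worth_fixing(
    minutes_to_fix: float,
    remaining_runs: int,
    manual_minutes_per_run: float,
    automated_minutes_per_run: float = 0.0,
) -> bool:
    """Return True if reviving a dead script is expected to save time.

    The original authoring time is a sunk cost and deliberately ignored;
    only the fix cost and the runs still ahead are compared.
    """
    savings = remaining_runs * (manual_minutes_per_run - automated_minutes_per_run)
    return savings > minutes_to_fix
```

Consider a 2-hour fix with 9 minutes saved per run. With 30 runs remaining, `worth_fixing(120, 30, 10, 1)` is `True`. With only 5 runs left, it is `False`.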

Update: I had forgotten where I read this concept; it was years ago. Thanks to a link, I found Bruce McLeod's weblog and, through it, Brian Marick's 1998 article on this very subject, "When Should a Test Be Automated?" It's a great read, and I highly recommend it. It was one of the first articles I read on test automation, back when we were just starting our Mac automation system.

When you write a test script, or go to update one because of "script death," consider the following questions. I'm sure there are others I've left out, but this is a start:

Since the payback in test automation comes only as the script is run, will it be run many times? Regression tests for critical areas are great candidates for automation. One example is verifying that a security hole stays plugged. Another is testing a core area, such as file open and file save. The cost of a regression in these areas is very high, and the tests will be run against each new daily build.

Some tests might not be core or critical, but the testing involved is mind-numbing and easy to mess up. A good example might be opening 1,000 user documents and making sure your app doesn't crash. :-) Tedious testing makes unhappy testers, and a happy tester is a productive tester.
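Here is a minimal sketch of the 1,000-documents check. It assumes your harness exposes some way to open a document that raises an exception when the product crashes or reports an error. `open_document` is a hypothetical parameter, not a real API. This is deliberately a pure "doesn't crash" test. For this particular tedious task that is the goal, but it verifies nothing else.

```python
from pathlib import Path
from typing import Callable, Iterable


def open_all(
    paths: Iterable[Path],
    open_document: Callable[[Path], object],
) -> dict[Path, str]:
    """Open every document, collecting failures instead of stopping at the first.

    open_document stands in for however your harness drives the product
    (an API call, an AppleScript bridge, ...) and is assumed to raise on
    failure. Returns {path: error description} for every document that failed.
    """
    failures: dict[Path, str] = {}
    for path in paths:
        try:
            open_document(path)
        except Exception as exc:  # record it and keep going through the corpus
            failures[path] = f"{type(exc).__name__}: {exc}"
    return failures
```

Because failures are collected rather than raised, one unattended run reports every bad document in the corpus.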

For the automation to be worthwhile, it must verify something! I've seen far too many glorified crash tests marked as automation. If your automation code has no verification, the only time it will fail is when it encounters a crash. That is a good thing to catch, to be sure, but far short of the script's testing potential. When writing your script, consider what methods you'll use to get data back from the system for verification. Find or make APIs you can use (AppleScript can be very useful for this, hint, hint). Screenshot verification is fraught with difficulty; avoid it if you can.

One thing that will cause script death faster than just about anything else is the product changing. This is why scripting to the API (you do have an API, right?) is so much better than scripting the UI: the UI typically changes much more often than the API. Either way, consider whether the feature is new and undergoing lots of change. If so, avoid automating tests around it until it has settled down. (That can mean toward the end of the product cycle, which is when you are busiest looking for showstopper bugs.) Just know that if your test script doesn't earn much value this product cycle, it will in the next, provided the feature doesn't change.
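This sketch shows what "scripting to the API" buys you. The `Document` class is a hypothetical stand-in for a product's object model. The commented-out lines show the kind of UI-driven steps, such as menu titles and button labels, that a redesign would break.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical stand-in for the product's scriptable object model."""
    paragraphs: list[str] = field(default_factory=list)

    def insert_paragraph(self, text: str) -> None:
        self.paragraphs.append(text)

    def paragraph_count(self) -> int:
        return len(self.paragraphs)


def test_insert_paragraph_via_api() -> None:
    # A UI-driven version might look like this (hypothetical driver calls):
    #   ui.click_menu("Insert", "Paragraph")
    #   ui.type_text("Hello")
    # and would die whenever the menu is renamed or moved.
    doc = Document()
    doc.insert_paragraph("Hello")
    doc.insert_paragraph("World")
    assert doc.paragraph_count() == 2
    assert doc.paragraphs == ["Hello", "World"]


if __name__ == "__main__":
    test_insert_paragraph_via_api()
    print("passed")
```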

Automate around things that can be verified by a dynamic oracle. All verification needs some kind of code that says, "I'm expecting X; did I get it?" If you are encoding the definition of "success" in your test script, how sure are you that what counts as "correct" will not change? If you are not very sure, move to another area for automated testing.
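One way to read "dynamic oracle" is this: instead of hard-coding the expected answer, the test computes it at run time from an independent source it trusts. In this sketch `product_sort` is a hypothetical stand-in for the code under test. Python's built-in `sorted` plays the oracle over randomly generated inputs.

```python
import random


def product_sort(items: list[int]) -> list[int]:
    """Hypothetical code under test (a simple insertion sort here)."""
    result: list[int] = []
    for item in items:
        i = len(result)
        while i > 0 and result[i - 1] > item:
            i -= 1
        result.insert(i, item)
    return result


def check_against_oracle(trials: int = 200, seed: int = 1234) -> None:
    rng = random.Random(seed)  # seeded so any failure is reproducible
    for _ in range(trials):
        data = [rng.randint(-1000, 1000) for _ in range(rng.randint(0, 50))]
        expected = sorted(data)  # the oracle computes "correct" at run time
        actual = product_sort(data)
        assert actual == expected, f"input={data!r} expected={expected!r} got={actual!r}"
```

The test never states what the right answer is, so it survives changes to the inputs. It is only as trustworthy as the oracle itself.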

If something is easy to automate and unlikely to change, go for it. A good example is automating your setup and install testing. These tests will be run over and over again, and most installers have some kind of scriptability built in.

As you near the end of your project cycle, the chances that your automation will earn back its investment in the current cycle diminish. At the beginning, things are too turbulent. The best time to write automation is about the middle of the cycle, when things are mostly stable but there are still lots of builds left to test.

Some scripts are easy to write, and their verification easy to set up, for your English builds, but once you localize your project, "script death" becomes rampant. Keep this in mind. If you are writing a script, how hard will it be to localize when the time comes? Can you write it "OneWorld" from the beginning? If your script is almost certain to die on the localized builds, don't plan on using automation to augment your localization testing without significant work on your test scripts.
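This is a sketch of writing a check "OneWorld" from the start. Rather than comparing against an English literal, the test looks up the expected string by resource ID for the build's locale. The `STRINGS` table, its IDs, and its translations are hypothetical. A real product would load them from its own localized resources, and `actual_title` would come from the product.

```python
# Hypothetical string tables keyed by locale and resource ID.
STRINGS: dict[str, dict[str, str]] = {
    "en": {"untitled_doc": "Untitled", "save_button": "Save"},
    "fr": {"untitled_doc": "Sans titre", "save_button": "Enregistrer"},
    "de": {"untitled_doc": "Unbenannt", "save_button": "Speichern"},
}


def expected_string(locale: str, string_id: str) -> str:
    """Look up what the UI should say in this locale, by resource ID."""
    return STRINGS[locale][string_id]


def check_new_window_title(locale: str, actual_title: str) -> None:
    # Brittle alternative that dies on every non-English build:
    #   assert actual_title == "Untitled"
    expected = expected_string(locale, "untitled_doc")
    assert actual_title == expected, (
        f"[{locale}] new window title: expected {expected!r}, got {actual_title!r}"
    )


if __name__ == "__main__":
    for locale, title in [("en", "Untitled"), ("fr", "Sans titre"), ("de", "Unbenannt")]:
        check_new_window_title(locale, title)
    print("titles match for all locales")
```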

Investigation is by far the most time-intensive part of test automation. Write your scripts so they are atomic, or very specific in what they test. Don't write test scripts that run for 30 minutes unless that's their explicit purpose; you don't want to run a script for 30 minutes just to repro a failure that occurs in the 29th minute. Write your scripts so they are super easy to read and so the logs "yell" what the test is and how it is failing. Your automation harness will play an integral role in how easy it is to investigate automation failures.
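Here is a sketch of atomic tests using Python's standard `unittest` and `logging` modules. `word_count` is a hypothetical stand-in for the feature under test. The point is the shape:

* one behavior per test
* a name that says what is checked
* a failure message that says what went wrong

```python
import logging
import unittest

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
log = logging.getLogger("doc_tests")


def word_count(text: str) -> int:
    """Hypothetical stand-in for the feature under test."""
    return len(text.split())


class WordCountTests(unittest.TestCase):
    # One behavior per test: a failure names exactly what broke,
    # and reproducing it takes milliseconds, not 29 minutes.

    def test_empty_document_has_zero_words(self) -> None:
        log.info("checking word count of an empty document")
        self.assertEqual(word_count(""), 0, "empty document should report 0 words")

    def test_runs_of_spaces_are_not_words(self) -> None:
        log.info("checking that runs of spaces are not counted as words")
        self.assertEqual(word_count("a   b"), 2, "runs of spaces must not create extra words")

    def test_newlines_separate_words(self) -> None:
        log.info("checking that newlines separate words")
        self.assertEqual(word_count("one\ntwo"), 2, "a newline should separate words")


if __name__ == "__main__":
    unittest.main(verbosity=2)
```

When one of these fails, `unittest` reports the test method's name along with the custom message. The log then says what broke without anyone rerunning the whole suite.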

Small, atomic scripts run great in parallel!
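Because atomic scripts share no state, a harness can fan them out across workers. Here is a minimal sketch with `concurrent.futures.ThreadPoolExecutor`. It assumes each check is an independent callable that raises on failure; the function name is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable


def run_in_parallel(checks: dict[str, Callable[[], None]], workers: int = 4) -> dict[str, str]:
    """Run independent checks concurrently; return {name: 'PASS' or 'FAIL: ...'}."""
    results: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(fn): name for name, fn in checks.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                future.result()  # re-raises whatever the check raised
                results[name] = "PASS"
            except Exception as exc:
                results[name] = f"FAIL: {type(exc).__name__}: {exc}"
    return results
```

Threads suit checks that mostly wait on the product. For CPU-bound Python checks, `ProcessPoolExecutor` is the usual swap, though it requires picklable callables, so no lambdas.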

There is often a hope that automation can somehow magically babysit a feature just like a human tester running through a test plan. This is simply not true. A human can see so much more of what is going on and pattern-match a thousand different things simultaneously. An atomic automated script has its blinders on and is fully focused on verifying only what you specified when you wrote it. Don't underestimate how stupid automated tests can be.

In closing, automated testing is not a silver bullet that is going to solve all the problems of testing and software development. It is a valuable tool that you'd be silly not to employ in managing the complexity of software testing. I believe James Bach said it best:

"I love test automation, but I rarely approach it by looking at manual tests and asking myself “how can I make the computer do that?” Instead, I ask myself how I can use tools to augment and improve the human testing activity. I also consider what things the computers can do without humans around, but again, that is not automating good manual tests, it is creating something new."

6 comments:

Nice write up.

//The best time to write automation is about the middle of the cycle, when things are mostly stable, but there are still lots of builds left to test

What do you mean by "middle of the cycle"? Isn't it good practice to write automation in the first phase itself?

I'm a software developer, and while I work at an end-user organization rather than at a software house, there is no way that I add lines of code at a constant rate, year in, year out. More often than not, I'm altering existing lines. So the total LOC is not simply new LOC + old LOC. Also, not every LOC requires the same testing effort.

With respect to when to write automation: the earlier you write it, the longer you'll have to recoup the costs, so sure, that means writing your automation in the "first phase." The problem is that if you are automating stuff that will change a lot, you'll have lots of "script death," which makes it even harder to make the automation worthwhile. For old, stable features, sure, writing the automation early makes sense, but not for new features. "Middle of the cycle" simply refers to when a feature is stable enough that writing code to verify its behavior makes sense.

With respect to the lines-of-code comparison, I was simply trying to make the point that the total amount of code written adds up for testers in a way that it doesn't for developers. Of course developers don't write code at a constant rate; the round numbers and perfect graph should have given that away. A perfect representation of how a project's LOC changes over time wasn't the point; the point was the testing implications of a successful long-term software project. Sure, a well-factored object with no integration with anything and no security surface area will take less time to test than an exposed, highly integrated piece of code. But they are alike in that when the code changes, it needs to be tested, and that testing adds up.

I'd be really curious to learn what you guys at Microsoft use for your Mac test automation. I hope you have a post on this subject in the future :-)

It seems to me that a combination of AppleScript and Unix scripting (if applicable) is the best we can do on the Mac... There is a third-party tool I have tried called Eggplant, but it relies basically on screenshot verification and is pretty fragile.

Well, this reminds me of the unit testing technique. Perhaps this approach can go further, into something like Extreme Programming.

Perhaps, not that exaggerated and on a different level.
